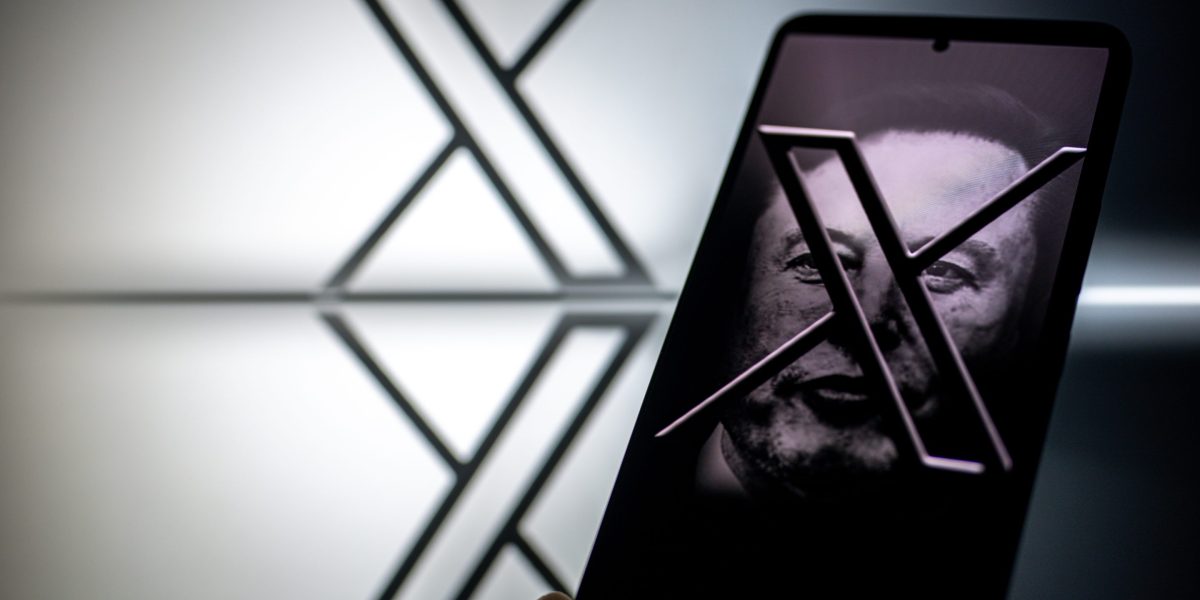

Inside the shifting plan at Elon Musk’s X to build a new team and police a platform ‘so toxic it’s almost unrecognizable’::X’s trust and safety center was planned for over a year and is significantly smaller than the initially envisioned 500-person team.

With Bluesky and Mastodon, I really don’t see people coming back to the platform. The network effect works both positively and negatively. If fewer people are using the platform, it will accelerate the move to other platforms.

It seems like a chunk of people (and large institutions) are trying to ‘wait it out’ and ignoring all the awful replies / content

Once people switch, they’re unlikely to go back. Problem is getting them to put in the time to switch.

I’d call it a win when we start seeing “____ said on Mastodon” in articles

I agree.

mirror: https://archive.vn/ghN0z

According to the former X insider, the company has experimented with AI moderation. And Musk’s latest push into artificial intelligence technology through X.AI, a one-year old startup that’s developed its own large language model, could provide a valuable resource for the team of human moderators.

An AI system “can tell you in about roughly three seconds for each of those tweets, whether they’re in policy or out of policy, and by the way, they’re at the accuracy levels about 98% whereas with human moderators, no company has better accuracy level than like 65%,” the source said. “You kind of want to see at the same time in parallel what you can do with AI versus just humans and so I think they’re gonna see what that right balance is.”

I don’t believe that for one second. I’d believe it if those numbers were reversed, but anyone who uses LLMs regularly knows how easy it is to circumvent them.

EDIT: Added the paragraph right before the one I originally posted, which specifies that their “AI system” is an LLM.

AI is whatever tech companies say it is. They aren’t saying it for people like you, who know it’s horseshit. They are saying it for the investors, politicians, and ignorant folks. They are essentially saying that “AI” (cue jazz hands and glitter) can fix all of their problems, so don’t stop investing in us.

That’s not about LLMs. Recently I was doing an AI analysis of which customers will become VIPs based on their interactions. The accuracy was coincidentally also 98%. Nowadays people equate AI with LLMs, but there’s much more to AI than LLMs.

I’m going off of the article, where they state that it’s an LLM. It’s the paragraph right before the one I originally posted:

According to the former X insider, the company has experimented with AI moderation. And Musk’s latest push into artificial intelligence technology through X.AI, a one-year old startup that’s developed its own large language model, could provide a valuable resource for the team of human moderators.

EDIT: I will include it in the original comment for clarity, for those who don’t read the article.

I trust Linda to run Twitter the same way I trust Ashley to run Vought: responsibly and without deference to a creep with a god complex

Weird how firing the trust and safety team on day one could come back to bite him.

Just shut it down until it has been cleaned to be a decent participant of the net again.

This is the best summary I could come up with:

In July, Yaccarino announced to staff that three leaders would oversee various aspects of trust and safety, such as law enforcement operations and threat disruptions, Reuters reported.

According to LinkedIn, a dozen recruits have joined X as “trust and safety agents” in Austin over the last month—and most appeared to have moved from Accenture, a firm that provides content moderation contractors to internet companies.

“100 people in Austin would be one tiny node in what needs to be a global content moderation network,” former Twitter trust and safety council member Anne Collier told Fortune.

And Musk’s latest push into artificial intelligence technology through X.AI, a one-year old startup that’s developed its own large language model, could provide a valuable resource for the team of human moderators.

“The site’s rules as published online seem to be a pretextual smokescreen to mask its owner ultimately calling the shots in whatever way he sees it,” the source familiar with X moderation added.

Julie Inman Grant, a former Twitter trust and safety council member who is now suing the company for lack of transparency over CSAM, is more blunt in her assessment: “You cannot just put your finger back in the dike to stem a tsunami of child sexual expose—or a flood of deepfake porn proliferating the platform,” she said.

The original article contains 1,777 words, the summary contains 217 words. Saved 88%. I’m a bot and I’m open source!